Revisiting Axon Server in Containers

In my previous blog on running a containerized Axon Server, I spent only a little text on the challenges of using a single StatefulSet scaled to the number of nodes we want in the cluster. I also completely skipped the subject of using Helm to manage the deployment. Both subjects merit more attention, so let’s do just that.

The Kubernetes Deployment Model

Kubernetes is a great platform for managing large numbers of small apps, especially if those apps can be run in multiple instances, with minimal or no state. In short, it is perfectly suited for running microservices and small components like “Functions” or “Lambdas”. However, saying it is only for those types of workloads is selling it short, because you can also deploy many traditional stateful applications on it.

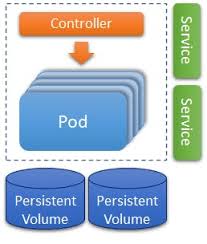

A typical deployment in Kubernetes will use a “Controller” to monitor the Pod’s health and restart it automatically as needed. It can also take care of migrating the deployment to a different ClusterNode if the current one needs to free up some space or vacate for maintenance. The controller can also scale the deployment to use multiple Pod copies, depending on configured criteria or an explicit target. Finally, you can add other (optional) components surrounding the Pod and its controller to provide network access points and persistence.

Communication to the outside world will use one or more Services, which expose ports to other parts of the cluster or even the outside world. These services can do this for an individual Pod and a scaled set of them, optionally integrating with Load Balancers and Ingress Controllers.

Given that a Pod is a transient thing, its disk storage will be lost when stopped or replaced. This need not be a problem as it can connect to a database for long-living data, but what if the Pod is a database itself? Persistent Volumes are the generalized interface to disk storage, and they can be used to connect to (provider dependent) long-living disks.

With that last point, we immediately see where Axon Server may need some special attention when we run it in Kubernetes, as we will be adding Axon Framework-based applications (microservices) that depend on it being available. Additionally, for event sourcing to work, we’ll need to guarantee that the data is indeed persistent, so the Pods running Axon Server need not just any available volume but a volume belonging to the specific Axon Server node running in that Pod. This is what a StatefulSet adds to a Deployment: Pod/container hostnames are made predictable (the name of the set plus a number starting from zero), and volume claims link each volume to that specific Pod instance. This link will hold when the Pod is, for example, migrated to another worker node, which can lead to complex interplay with the provider’s infrastructure when the other node is in a different Availability Zone.

So does this address all our concerns regarding Axon Server? Can we cluster it by just scaling the StatefulSet to the number of nodes we want to have?

Pod Health Monitoring and Restarting

As I noted in the previous section, Kubernetes employs a Controller that can automatically restart a Pod when it is unhealthy, start extra copies if it is stressed, or reduce the number of running copies if there is not enough work. Several standard implementations of such controllers are available that take care of one or more of these aspects. This means we must be able to provide it with some indication of health and/or load. Axon Server is built using the Spring Boot toolset, which includes support for “actuators”: REST endpoints that can provide such data. The health endpoint for Axon Server Enterprise Edition actually provides a comprehensive view of the complete cluster’s status, which means it will return a “WARN” status (and a non-200 HTTP response code) if any of the nodes has a problem. From a Kubernetes deployment perspective, that is not a good starting point because it would prompt a restart of all nodes even when there is a transient problem on only a single node. Additionally, if there is a problem in one out of several replication groups, the node could still be considered healthy, and actions other than a restart could be indicated to restore it to normal operation. For this reason, we advise against using the health actuator as an indicator for “terminal” problems. A better approach is to use the “Info” actuator (at REST endpoint “/actuator/info”), which will always return an “OK” response as long as the application can serve requests.

The next thing we need to look at is the difference in lifecycle phases for a node. When Axon Server starts, it will first perform several consistency checks on the event store. For example, if the event store was copied from a backup, and no indices were found, it will generate those before declaring itself ready for service. Even if those kinds of activities aren’t needed, a large number of contexts can cause a significant wait while it checks the last few segments for each. If at the same time we have a health probe that requires some response within 5 seconds, Kubernetes may decide the node isn’t healthy and replace it with yet another new one. A second probe named the “readinessProbe” is specifically for this scenario and can specify a longer initial wait.

The other delay we need to look at is concerned with the behavior at shutdown. When a Pod is deleted, the Pod’s main command is sent a SIGTERM signal, after which the Controller will wait a configurable number of seconds before it decides the process is unwilling (or unable) to perform a clean shutdown. The second attempt uses a signal named SIGKILL, which is exactly as destructive as it sounds. For Axon Server, a SIGTERM is taken as a request to shut down cleanly, causing it to flush all its buffers to disk. A SIGKILL will prevent this from happening and may cause inconsistent data in the Control DB, data files, or indices. These could then cause trouble at the next start or even later when it tries to continue storing data where it left off. For this reason, it is better to use a larger value than the default of 30 seconds, or at the very least to monitor actual shutdown performance, so you get a notification when a larger setting becomes necessary.
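To make the probe and shutdown settings concrete, here is a minimal sketch in Python that builds the relevant fragment of a Pod spec as a plain dictionary. The function name, the container name, and the default numbers are illustrative assumptions, not recommended values; port 8024 is assumed to be Axon Server’s HTTP port. Both probes use the “Info” actuator, and both wait for the same initial delay so that a slow start is not mistaken for a failure.

```python
def axon_server_pod_spec(image: str,
                         startup_wait_seconds: int = 60,
                         grace_seconds: int = 120) -> dict:
    """Build a Pod spec fragment with probes and a longer shutdown grace period.

    All names and default values here are assumptions for illustration only.
    """
    # Probe the Info actuator, which only reflects whether this node can serve requests.
    info_check = {"httpGet": {"path": "/actuator/info", "port": 8024}}
    probe = {
        **info_check,
        "initialDelaySeconds": startup_wait_seconds,  # leave room for startup checks
        "periodSeconds": 10,
        "timeoutSeconds": 5,
        "failureThreshold": 3,
    }
    return {
        # Larger than the Kubernetes default of 30 seconds, so buffers can be flushed.
        "terminationGracePeriodSeconds": grace_seconds,
        "containers": [{
            "name": "axonserver",
            "image": image,
            "livenessProbe": dict(probe),
            "readinessProbe": dict(probe),
        }],
    }
```

Serializing the result with a YAML or JSON library gives something you can merge into a Pod template. Kubernetes also offers a “startupProbe” for slow-starting containers, which is left out here to stay close to the text.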

Kubernetes Scaling and Axon Server’s “Statefulness”

Kubernetes controllers can scale a Pod to get several, initially identical, copies of our application, as stated above. From a Kubernetes perspective, these multiple copies should serve the same purpose, so it doesn’t matter for the “application” which of these copies picks up a request. I put the word “application” between quotes because it doesn’t really matter who provides the work that these Pods will perform: it could be some external application that sends in requests through an API, or the Pods themselves may be part of an application that requires their services. If the load on the Pods increases, you can add new copies (scaling up), and when the load decreases, you can shut a few down (scaling down). If the Pods use Persistent Volumes (PV for short), this may help to reduce the startup time, allowing them to continue where they left off. When using PV Claim (PVC for short) templates with StatefulSets, you provide Kubernetes with a template for the claim on the amount of space the volume needs to provide. Kubernetes can then create the actual claim when the Pod is first instantiated, and the Kubernetes implementation will take care of finding some way to fulfill that claim. Because the Pod's name is predictable and the PVC is linked to that particular Pod name, the claim will stay around even when the set is deleted, ready to be reused as soon as the Pod appears again.

For Axon Server, a cluster is built from nodes that share the same application, but their states may be wildly different. For example, if you have a cluster with five nodes, you may have different sets of three nodes (out of those five) sharing a replication group. This means that if you shut down a random node, some replication groups may be downgraded from three to two nodes while others still have all three nodes available. If you shut down a second node, you could have replication groups with only a single node left, which would render them unable to reach a consensus on the data to be stored. On the other hand, the Kubernetes model assumes that having a certain number of Pods available is enough, not a selection of specific ones. Also, if, for whatever reason, Kubernetes needs to vacate a worker node, let’s say that this node needs to receive a software upgrade, then all Axon Server Pods that happen to run there will need to be migrated. We need to ensure this does not influence the availability of the Axon Server cluster.
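The effect of shutting down “random” nodes can be illustrated with a small Python sketch. It assumes a simplified model in which a replication group can reach consensus only while a strict majority of its members is running; the node and group names are made up for the example.

```python
def groups_with_quorum(groups: dict[str, set[str]], down: set[str]) -> dict[str, bool]:
    """For each replication group, report whether a majority of its nodes is still up.

    Simplified model: consensus needs more than half of the group's members.
    """
    result = {}
    for name, members in groups.items():
        alive = len(members - down)
        result[name] = alive > len(members) // 2
    return result


# Five nodes, three replication groups of three nodes each (hypothetical layout).
groups = {
    "rg-a": {"node-1", "node-2", "node-3"},
    "rg-b": {"node-3", "node-4", "node-5"},
    "rg-c": {"node-1", "node-4", "node-5"},
}

one_down = groups_with_quorum(groups, {"node-1"})
two_down = groups_with_quorum(groups, {"node-1", "node-2"})
```

With only “node-1” down, every group keeps its majority. With “node-1” and “node-2” down, “rg-a” has a single node left and loses its majority, while “rg-b” is not affected at all. Kubernetes only counts Pods, so it has no way of knowing that these two situations are different.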

The final problem with the Kubernetes scaling model has to do with shutting down specific Axon Server nodes for maintenance. Even though a lot of effort is put into ensuring that no data is permanently lost, reality forces us to keep in mind that recovery may need some manual help, say in the event that the PV of a single instance is lost due to hardware problems. A StatefulSet can be scaled down until the Pod we want to stop has been stopped, but that also stops all Pods with a higher number, even if there was nothing wrong with them.

For Axon Server in Kubernetes, the best approach is to use “Singleton StatefulSets”, meaning that each node gets its own StatefulSet, which is never scaled above 1. This way, we still get all the advantages of predictable names and linked storage but are free to give each node a unique identity and state. This may sound as if we throw away the advantage of dynamic availability management that Kubernetes provides. However, in the case of Axon Server, this happens to be what we want because we want the Axon Server cluster to take care of this. If a new client app connects, and the node it connects to already serves several clients, then that Axon Server node will have a full overview of the load on each node in the targeted replication group and can redirect the client to the ideal alternative.
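As a rough sketch of the “Singleton StatefulSet” idea, the following Python function generates one StatefulSet manifest per Axon Server node, each fixed at a single replica with its own volume claim template. The naming scheme, labels, storage size, and the “/eventdata” mount path are assumptions for illustration; a real deployment would also need Services, configuration, and more volumes.

```python
def singleton_statefulsets(cluster_name: str, node_count: int, image: str,
                           storage: str = "5Gi") -> list[dict]:
    """Generate one StatefulSet (replicas: 1) per Axon Server node.

    Names, labels, and sizes are illustrative assumptions.
    """
    manifests = []
    for i in range(1, node_count + 1):
        name = f"{cluster_name}-{i}"      # the Pod will be named "<name>-0"
        labels = {"app": name}
        manifests.append({
            "apiVersion": "apps/v1",
            "kind": "StatefulSet",
            "metadata": {"name": name, "labels": labels},
            "spec": {
                "serviceName": name,
                "replicas": 1,            # never scaled above 1
                "selector": {"matchLabels": labels},
                "template": {
                    "metadata": {"labels": labels},
                    "spec": {"containers": [{
                        "name": "axonserver",
                        "image": image,
                        "volumeMounts": [{"name": "eventdata",
                                          "mountPath": "/eventdata"}],
                    }]},
                },
                # Each node gets its own claim, linked to its own predictable Pod name.
                "volumeClaimTemplates": [{
                    "metadata": {"name": "eventdata"},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "resources": {"requests": {"storage": storage}},
                    },
                }],
            },
        })
    return manifests
```

Because every node is its own set, stopping one node means scaling only that set to zero, which leaves the other nodes untouched.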

Persistent Data

Axon Server provides CQRS and Event Sourcing applications with an event store that plugs seamlessly into the Axon Framework. Event Sourcing changes the task of maintaining the state of aggregates, from storing and updating the state itself in a regular database to storing and replaying the events that led to this state in an event store. This also changes the storage requirements from needing only enough space to store the current state of all aggregates to needing space to store the history of all aggregates, even when their lifespan has ended. This means we gain an immutable history of our aggregates’ lives at the cost of an ever-increasing need for storage space. More importantly, we gain a simpler access pattern: replacing an updatable store with an append-only one.

As discussed in the previous section, we need to ensure that Axon Server will use Persistent Volumes for practically all of its data. Still, the event store can benefit from a specialized underlying infrastructure component, given its eventual size. Cloud providers will generally allow you to specify which storage solutions need to be used for a specific volume using a StorageClass specification. Still, you have to be careful because it can also influence access patterns. For example, if you use the default StorageClass, you may lose the option of having multiple Pods connect to the same volume, limiting your options for creating backups.

Another aspect of the storage class might be the accessibility across geographical locations. Shared file-based storage commonly adds a layer of redundancy to enhance availability. Still, it also tends to put restrictions on the number of IOPS because it is a shared medium.

Backups

Using an Axon Server Enterprise Edition cluster, the event store will be replicated among the nodes, which also helps increase its availability. However, if deployed to a single Kubernetes cluster, all nodes could still be physically located in the same Availability Zone or Region. This means that a failure that results in an entire region being lost, even though unlikely, could still lose your data. Furthermore, even if the data is not lost, you won’t be able to access it to build a temporary replacement cluster in another region. So we need to look at backups, preferably in another region.

For backups, there are basically two approaches: copying files and using backup nodes.

Copying Files and File System Backups

The simplest form of event store backups is those where you make a copy of the files. Axon Server provides REST endpoints that can help you in this process:

- The “/createControlDbBackup” endpoint will cause a ZIP file of the Control DB to be stored at a configurable location, which you can then retrieve and store. For Axon Server EE, the “/v1/backup/createControlDbBackup” endpoint will do the same on all nodes of the cluster.

- The “/v1/backup/filenames” endpoint returns filenames of the event store segments that are closed, meaning they will no longer change. This can be requested for any context, both for events and snapshots. The current segment can also be copied, but it will only be partially filled, so you’d preferably also keep a copy of the Control DB from at most the same age to know where to continue.

- The “/v1/backup/log/filenames” endpoint provides a similar list of files in the replication log of a specified replication group.

Using these endpoints, you can make incremental backups of the event store and Control DBs, but you’ll need access to the volumes.
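The incremental part of such a backup can be sketched in a few lines of Python. The function below takes the list of closed segment files (as returned by the filenames endpoint) and the set of files already copied in earlier runs, and returns what still needs to be copied. How the list is fetched and how files are actually copied from the volume is left out; the function name and the file names in the example are hypothetical.

```python
def segments_to_copy(closed_segments: list[str], already_copied: set[str]) -> list[str]:
    """Return the closed segment files that have not been backed up yet.

    Closed segments no longer change, so a file that was copied once never
    needs to be copied again.
    """
    return sorted(set(closed_segments) - already_copied)


# Hypothetical file names, for illustration only.
reported = ["/eventdata/default/0001.events",
            "/eventdata/default/0002.events",
            "/eventdata/default/0003.events"]
done = {"/eventdata/default/0001.events"}

todo = segments_to_copy(reported, done)
```

After a successful copy, the returned names are added to the “already copied” set, so the next run only picks up segments that were closed in the meantime.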

An alternative solution can be simply using snapshots of the disks, using your provider’s facilities to store these in another region. Procedures like a partial restore may be a bit more complicated, but converting the backups into usable volumes is a lot easier. You have to be careful, though, and ensure this uses an incremental strategy, or you’ll end up with increasingly longer backup times.

Backup Nodes

A straightforward approach to creating backups is to use Axon Server’s own replication mechanisms. For example, you can add a remote instance of Axon Server to a replication group in the role of a Passive or Active Backup node.

A Passive Backup Node is a passive follower because it receives all data for the event store but is not a part of the storage transaction. The immediate advantage is that client applications won’t have to wait for the remote site to get a copy of the data. The delay is typically measured in milliseconds, depending on the speed of the network connection to it. If you want to make proper disk backups and shut this remote node down while you do this, the cluster will keep functioning normally. When it comes back online, the current leader will send any data it missed.

If your requirements for having an up-to-date backup are strict, you may want to accept the delay and use a pair of Active Backup Nodes. If a replication group contains one or more of these, at least one must acknowledge storage of data to confirm the commit back to the client. This “at least one” allows us to ensure we have a full backup while still enabling us to shut one down for a file-based backup or to perform upgrades.
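The difference between the two backup roles can be expressed as a small decision function. This is a deliberately simplified model in Python, not Axon Server’s actual implementation: it assumes a commit needs acknowledgements from a majority of the regular (primary) nodes, plus at least one Active Backup node if the group has any, while Passive Backup nodes never count. All names are made up for the example.

```python
def can_confirm_commit(acks: set[str], primaries: set[str],
                       active_backups: set[str]) -> bool:
    """Decide whether a commit can be confirmed to the client (simplified model).

    Passive backup nodes are intentionally absent: they receive the data
    but are not part of the storage transaction.
    """
    majority = len(acks & primaries) > len(primaries) // 2
    backup_ok = not active_backups or bool(acks & active_backups)
    return majority and backup_ok


primaries = {"node-1", "node-2", "node-3"}
backups = {"backup-1", "backup-2"}

# One active backup is down for a file-based backup; the other still acknowledges.
ok = can_confirm_commit({"node-1", "node-2", "backup-2"}, primaries, backups)
# No active backup acknowledged, so the client keeps waiting.
blocked = can_confirm_commit({"node-1", "node-2", "node-3"}, primaries, backups)
```

The first case shows why a pair of Active Backup Nodes is useful: either one can be taken offline without blocking commits.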

Multi-region Clusters

As touched upon several times now, it can be really advantageous to have a cluster spread across multiple regions. This helps not only performance when we have users in those different regions, but also availability and disaster recovery. Kubernetes is, however, not really designed to have location-aware apps. I’ll admit you could actually do this, using tags in a deployment’s metadata section, but all major cloud providers restrict their managed Kubernetes solutions to a single region.

There is some movement, though: Google, for example, announced that GKE could have Services span across clusters, which could help achieve this. However, the amount of “handwork” needed is still significant and starts with VPC and firewall configurations. So, achieving this without sacrificing Kubernetes as your platform will require work outside of the Kubernetes platform. This work is mainly needed to make the network connections between the regions work seamlessly.

The Question of a Helm Chart

In the “Running Axon Server” repository on GitHub, I put an example of a Helm Chart for Axon Server SE. So naturally, the question has come up of putting it in an official Helm repository. As the above discussion hopefully made clear, there are a lot of knobs to turn for your deployment, and they are often quite tightly linked to the products of your cluster’s provider. I have seen several charts that solve this by allowing you to provide many details in the values at the cost of very complex “template programming”. Unfortunately, for a cluster of Axon Server EE nodes, where these nodes are each configured as a singleton-StatefulSet, even a “simple” chart will require quite a bit of Go Template language use, with several loops and lots of choices.

I want to start with the following observation about Helm itself: it is a tool for streamlining the installation of Kubernetes-hosted applications. The application consists of multiple deployable components. These components (Pods, Services, StatefulSets, etc.) need to have synchronized customizations, while a relatively simple set of values can drive the customizations. You also want to be able to upgrade the application by “simply” applying updated deployment descriptors. In this view, the Helm Chart is a tool that captures a set of choices, resulting in a “Good Enough” deployment for most situations. However, the more choices are left open, the more complex the chart becomes, both to use and to maintain. This becomes exponentially worse if the Chart has to allow for more than just upgrades to a new application version and also has to consider that you may want to redo the installation with different choices while still retaining the data. And then, we apply “lessons learned” to upgrade the Chart itself with more and different choices…

I hope the point I wanted to make here comes across. Tools like Helm and Bosh, but also Terraform and many, many others, are great for getting an automated deployment process. However, once we reach production, the most important thing we want for our infrastructure, and from the perspective of an Axon Framework user, we definitely consider Axon Server a part of that, is a stable situation. Upgrading Axon Server should consider our regional deployment of nodes and respect the replication groups and their need to continue operations while the change is executing.

This means that these tools are most valuable when we initially deploy an application or are in the process of restoring an installation as part of a disaster recovery scenario. They are even more valuable if they can capture standards across installations, allowing us to quickly create templates for new installations based on how these need to fit in with the rest. We want to minimize the changes needed for upgrade scenarios and may even need to add special steps that only apply to that particular upgrade. If we want to change installation details, so a full rebuild of the cluster results in something different from what we have now, the change will need even more care to prevent us from thinking we need a rollback procedure. And nobody wants that. So we always “fix forward”!

So, “It Depends” Wins Again?

The most feared reply from the standpoint of a customer and the most often true one for the consultant is “It depends.” Sure, there are lessons learned and best practices. Still, they rarely result in a detailed and standardized playbook for every situation, if only because no organization that only wants the off-the-shelf solutions is ever called “Acme Inc.”, and no product details page starts with the phrase “Lorem ipsum”.

My personal preference is a set of tools or procedures that automatically replace an Axon Server instance with a freshly built one and do this one node at a time, with every step checking that all nodes are happy before we move on to the next one. I don’t mind making manual changes first to ensure that necessary conversions or updates have been applied, as long as I can trigger an automated rebuild immediately afterward. This will also allow for updates on the base underneath Axon Server, such as an upgrade of the Java Runtime.
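The shape of such a procedure could look like the Python sketch below. The two callables are placeholders for whatever your platform offers (for example, deleting a Pod and polling the cluster status); they are hypothetical and not part of any Axon Server or Kubernetes API. A real version would wait and retry rather than give up at the first failed check.

```python
from typing import Callable, Iterable


def rolling_rebuild(nodes: Iterable[str],
                    rebuild: Callable[[str], None],
                    all_healthy: Callable[[], bool]) -> list[str]:
    """Rebuild nodes one at a time, stopping as soon as the cluster is not healthy.

    `rebuild` and `all_healthy` are supplied by the caller (hypothetical hooks).
    """
    done: list[str] = []
    for node in nodes:
        # Never touch the next node while any node is still unhappy.
        if not all_healthy():
            raise RuntimeError(f"cluster not healthy before rebuilding {node}; stopping")
        rebuild(node)
        done.append(node)
    # Final check, so the caller knows the last rebuild also ended well.
    if not all_healthy():
        raise RuntimeError("cluster not healthy after the last rebuild")
    return done
```

Stopping instead of rolling back fits the “fix forward” attitude: the procedure leaves the cluster as it is and lets an operator decide how to continue.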

A Public Image for Axon Server SE and EE

We have offered a public Docker image for Axon Server SE on Docker Hub for some time now. The first image was created “by hand” and used a Dockerfile that started Axon Server using a shell script. Environment variables were defined to adjust the configuration easily, but this resulted in an unnecessarily complex startup. Since version 4.1.5, the build has changed to use Google’s Jib plugin for Maven, which forced us to abandon the startup shell script because the plugin uses an “exploded” installation and starts the application directly. However, environment variables can still be used because Spring Boot allows you to use an all-uppercase version of Java property names, also exchanging the dot for an underscore. Another change was that we explicitly declared two volumes, one for the event store at “/eventdata” and the other at “/data” for the other files and directories that Axon Server wants to write to.
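The naming rule for environment variables is easy to capture in code. The Python helper below is a small sketch of it: dots become underscores and everything is uppercased. It also removes dashes, which is the convention Spring Boot documents for binding from environment variables; the helper’s name is made up, and the property names are only examples.

```python
def property_to_env(name: str) -> str:
    """Convert a Spring Boot property name to its environment variable form."""
    return name.replace(".", "_").replace("-", "").upper()


hostname_var = property_to_env("axoniq.axonserver.hostname")
port_var = property_to_env("server.port")
```

Here “axoniq.axonserver.hostname” becomes “AXONIQ_AXONSERVER_HOSTNAME” and “server.port” becomes “SERVER_PORT”, which you can then set in the “env” section of the container.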

Although easy to build and use, this caused a few (potential) issues:

- Axon Server is still run as user “root,” which is not just bad from a security point of view but a real problem when you want to use it on OpenShift, which has non-root containers as a policy. Also, the installation directory (in “/”) is not ideal in this respect, as the (virtual) filesystem should conform to Linux standards.

- The (default) distroless images do not provide a shell, which can be a problem in development environments where you want to “go inside” the container to see what is happening.

- Extensions (a feature of Spring Boot executable JAR files) no longer worked. This feature allows you to put jar files in a directory named “exts”, which will then be added to the classpath. We use this in version 4.5 for external authentication/authorization add-ons in Axon Server EE.

- Although we always warned people that the image was for convenience but not intended for production deployments, it was used anyway. Unfortunately, the 4.1.4 to 4.1.5 change in the image immediately caused trouble for those who used “:latest” or upgraded without reading all of the README.

Since the release of Axon Server 4.5, we have revamped the build of our internal EE image, and when we feel confident enough with it, we’ll risk a second change to the public image to fix some of the issues above. The new SE image will run in “/axonserver”, and we’ll build versions based on all 4 distroless variations, which will add “debug” (for the shell), “nonroot” (for an “axonserver” user), and “debug-nonroot” (for both) versions. It will also add “/axonserver/exts” to the classpath and declare a volume for it, and add a volume for “/axonserver/config” so you can place a property file in it.

Let’s keep in touch

If you’re not already a member, join the community at “discuss.axoniq.io”. Updates and releases are announced there, and you can ask your questions and help others with theirs. You might also be interested in the PodCasts that are available on the “Exploring Axon” PodBean.